Rails development with Docker

I’ve been busy working on a personal side project recently. It’s a web application written in Ruby on Rails. I have played around with Docker a few times in the past, and now I want to apply it to my development workflow. Below are some useful things that I learned.

Installation

The Docker engine does not run directly on OSX, so we need to run it in a virtual machine. The last time I used Docker, I could get it up and running with a couple of `brew` commands (`brew install boot2docker`, `brew install docker`…). Since then, some new tools have been introduced: Docker Machine, Docker Compose… I decided to install Docker the way the official documentation recommends: using Docker Toolbox. Docker Machine is used to create Docker hosts, on which the containers run, either on our own machine or on cloud providers. The Docker Toolbox installer may already have created a Docker host named `default` using VirtualBox. You can check with this command:

    docker-machine ls
    NAME      ACTIVE   DRIVER       STATE     URL   SWARM
    default            virtualbox   Stopped

We should now start this host:

    docker-machine start default
    Starting VM...
    Started machines may have new IP addresses. You may need to re-run the `docker-machine env` command.

Then we need to set some environment variables so that the Docker client can talk to the Docker host we just started:

eval $(docker-machine env default)

Dockerize our Rails app

To Dockerize the Rails app, we first need a `Dockerfile`, which specifies the steps needed to package our app into a Docker image.

    FROM ruby:2.2.0
    RUN apt-get update -qq && apt-get install -y build-essential libpq-dev nodejs
    RUN mkdir /app
    WORKDIR /app
    ENV BUNDLE_GEMFILE=/app/Gemfile \
        BUNDLE_JOBS=2 \
        BUNDLE_PATH=/app/vendor/bundle
    ADD . /app
    RUN bundle install

The above file is pretty simple: it builds on a base image (which includes Ruby 2.2.0), installs some packages required by our Rails app, creates a working directory `/app` and adds the source code to it. Finally, it installs the gems specified in `Gemfile`.

At this point we can build our image:

docker build -t my_app .

(Remember to change `my_app` to your app’s name)

Docker Compose

The Rails app needs a PostgreSQL database for persistence. The Docker way to do this is to run PostgreSQL in its own container and link our web container to it. Docker already supports this kind of link (Link Containers), which we can use like this:

docker run --link postgres_container:db -v "$(pwd)":/app my_app rails server

But if we add more services (such as Redis, Elasticsearch, Sidekiq…) to our stack, this is no longer a good approach: we would have to build (or pull) and start each container manually. It’s time for a simple, automatic way to define our application stack and start the entire application. That is exactly what Docker Compose does. To get started with Docker Compose, we need a `docker-compose.yml` file in our application root directory:

    web:
      build: .
      command: bundle exec rails s -p 3000 -b '0.0.0.0'
      volumes:
        - .:/app
      ports:
        - "3000:3000"
      links:
        - db
    db:
      image: postgres

The above file defines two containers: one for our web app and one for the PostgreSQL service, which uses an official image downloaded from Docker Hub. Every time we start our application with `docker-compose up`, Docker Compose checks whether the web image needs to be built, then starts the web container along with the database container `db`.

- Note: You may need to update `config/database.yml` so that Rails can “see” PostgreSQL on the database container:

    development: &default
      adapter: postgresql
      encoding: unicode
      database: my_app-dev
      username: postgres
      password:
      host: db
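If Rails still cannot connect, it can help to confirm from inside the `web` container that the `db` hostname created by the link resolves and accepts connections. Below is a minimal sketch in plain Ruby (standard library only). The file name `check_db.rb` and the helper `port_open?` are hypothetical names, and port `5432` is assumed to be the PostgreSQL default used by the official image.

```ruby
# check_db.rb (hypothetical helper, not part of the original project)
require 'socket'

# Returns true if a TCP connection to host:port succeeds within `timeout` seconds.
def port_open?(host, port, timeout: 2)
  Socket.tcp(host, port, connect_timeout: timeout) { true }
rescue SystemCallError, SocketError
  # Connection refused, timed out, or the hostname could not be resolved.
  false
end

# Only runs when called as: ruby check_db.rb HOST PORT
if __FILE__ == $PROGRAM_NAME && ARGV.size == 2
  host, port = ARGV
  puts "#{host}:#{port} reachable: #{port_open?(host, Integer(port))}"
end
```

It could be run with something like `docker-compose run web ruby check_db.rb db 5432`.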

Workflow

So now our app has been started. It is reachable at http://192.168.99.100:3000. Here `192.168.99.100` is the IP of our Docker host (you can obtain it with `docker-machine ip default`), and `3000` is the port we specified in `docker-compose.yml`, which maps port `3000` on the Docker host to port `3000` on the web container. Normally `docker-compose up` is enough to start the app. But I find it awkward when I want to debug with `byebug` or `pry-rails`, because the interactive console doesn’t show up. So I use this instead:

docker-compose run --service-ports -e TRUSTED_IP=192.168.99.1 web

This brings up the linked containers (only `db` so far) and starts the web server. The `-e TRUSTED_IP=192.168.99.1` option passes an environment variable to Rails so that I can use the better_errors gem to debug errors right on the error pages.
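For context: by default better_errors only renders its interactive error page for requests coming from localhost, and a browser on OSX reaches the container from the VirtualBox host address (assumed here to be `192.168.99.1`). The gem's README suggests reading the allowed address from `TRUSTED_IP`. A minimal sketch of such an initializer follows; the file path is an assumption, and the `defined?` guard is an extra precaution so the file is harmless where the gem is not loaded.

```ruby
# config/initializers/better_errors.rb (assumed path)
# Let the address passed via TRUSTED_IP see the better_errors pages.
# The defined? guard keeps this a no-op when the gem is not loaded.
if defined?(BetterErrors) && ENV['TRUSTED_IP']
  BetterErrors::Middleware.allow_ip!(ENV['TRUSTED_IP'])
end
```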

To run other Rails and Rake commands, we use `docker-compose run`. For example:

    docker-compose run web rails console
    docker-compose run web rails generate model MyModel name:string
    docker-compose run web rake db:migrate
    docker-compose run web rake test

Every time I update `Gemfile` to add a new gem or remove old ones, I need to rebuild the `web` image with `docker-compose build` and run `docker-compose run web bundle install`.

I also use ActionMailer to send emails, so I need a way to see what the outgoing emails look like. `mailcatcher` (http://mailcatcher.me/) fits this perfectly, and fortunately the community has already built a Docker image for it: https://hub.docker.com/r/yappabe/mailcatcher/. I add another container to `docker-compose.yml` and update `config/environments/development.rb` to use the mail server at host `mailcatcher` and port `1025`. Now `docker-compose.yml` looks like this:

    web:
      build: .
      command: bundle exec rails s -p 3000 -b '0.0.0.0'
      volumes:
        - .:/app
      ports:
        - "3000:3000"
      links:
        - db
        - mailcatcher
    db:
      image: postgres
    mailcatcher:
      image: yappabe/mailcatcher
      ports:
        - 1025:1025
        - 1080:1080

From now on I can check outgoing emails at http://192.168.99.100:1080/. Thanks to Docker, I don’t have to spend time setting `mailcatcher` up manually.
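The change to `config/environments/development.rb` mentioned above is not shown, so here is a minimal sketch of what it might look like, assuming the standard ActionMailer SMTP settings. The host name `mailcatcher` is the link name from `docker-compose.yml`, and `1025` is the SMTP port that container publishes.

```ruby
# config/environments/development.rb (excerpt; runs inside the Rails app)
Rails.application.configure do
  # Send development mail over SMTP to the linked mailcatcher container.
  config.action_mailer.delivery_method = :smtp
  config.action_mailer.smtp_settings = { address: 'mailcatcher', port: 1025 }
end
```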

Time for Tests

To apply continuous testing, I use Guard with Minitest. I add another container named `test`, which is almost the same as `web`:

    web: &app_base
      build: .
      command: bundle exec rails s -p 3000 -b '0.0.0.0'
      volumes:
        - .:/app
      ports:
        - "3000:3000"
      links:
        - db
        - mailcatcher
    db:
      image: postgres
    mailcatcher:
      image: yappabe/mailcatcher
      ports:
        - 1025:1025
        - 1080:1080
    test:
      <<: *app_base
      ports: []
      command: guard start --no-bundler-warning --no-interactions
      environment:
        RACK_ENV: test
        RAILS_ENV: test
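The `guard start` command in the `test` service expects a `Guardfile` in the project root, which is not shown here. A minimal sketch, assuming the guard-minitest plugin and the default Rails `test/` layout (the watch patterns are illustrative assumptions):

```ruby
# Guardfile (illustrative sketch; evaluated by Guard, not run directly)
guard :minitest do
  # Re-run a test file when it changes.
  watch(%r{^test/(.*)_test\.rb$})
  # Re-run the matching test when a file under app/ changes.
  watch(%r{^app/(.+)\.rb$}) { |m| "test/#{m[1]}_test.rb" }
  # Re-run the whole suite when the test helper changes.
  watch(%r{^test/test_helper\.rb$}) { 'test' }
end
```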

I run `docker-compose build` to build the images, and start the `test` container in a separate tmux pane:

docker-compose run test

This works as expected: the tests run and report dots, errors and failures. However, it also introduces a new problem…

Gotchas

Update: As of 20/01/2016, I have finally got rid of this problem completely on OSX. The solution is that I no longer stick with VirtualBox. Instead, I use xhyve to create the virtual machine that runs Docker. xhyve is more lightweight and faster than VirtualBox. Details on setting up xhyve for Docker can be found here: https://allysonjulian.com/setting-up-docker-with-xhyve/

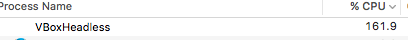

The problem is that it takes so long for the tests to run, and the CPU fan of my Macbook works like the machine is under heavy load. I have a look at `Activity Monitor` and find out that `VboxHeadless` is consuming more than 160% of CPU:

Further research leads me to the conclusion that this is related to a wontfix bug in vboxsf (the shared-folder filesystem implemented in VirtualBox). The bug makes syncing files from the host machine (OSX) to the guest machine (the Docker host) very slow and resource-hungry. There’s no perfect way to get rid of this bug (as long as I stick with OSX and VirtualBox). In my case, I decided to use docker-osx-dev, a tool by Yevgeniy Brikman, who also tried a lot of solutions for this problem. The main idea of the tool is to use rsync together with fswatch to sync files from the local machine (host) to the Docker host (guest).

This is much faster than `vboxsf`, but it comes with a trade-off: it only syncs from host to guest, which means that files generated inside containers are not synced back to OSX. We will not see those files in our text editors.
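To make the idea concrete, here is a rough sketch of a one-way sync loop in Ruby. It is not how docker-osx-dev is actually implemented; it only illustrates the rsync plus fswatch approach. The helper names, the file name and the example target `docker@192.168.99.100:/app` are assumptions, and it presumes `rsync`, `fswatch` and SSH access to the Docker host are available.

```ruby
# sync_sketch.rb (hypothetical; illustrates the idea only)

# Builds the rsync command that mirrors local_dir into remote.
# The trailing slash makes rsync copy the directory's contents.
def rsync_command(local_dir, remote)
  ['rsync', '--archive', '--delete', "#{local_dir.chomp('/')}/", remote]
end

# Syncs once, then again after every batch of changes reported by fswatch.
def watch_and_sync(local_dir, remote)
  system(*rsync_command(local_dir, remote))
  # `fswatch -o` prints one line per batch of file system events.
  IO.popen(['fswatch', '-o', local_dir]) do |events|
    events.each_line { system(*rsync_command(local_dir, remote)) }
  end
end

# Only runs when called as: ruby sync_sketch.rb LOCAL_DIR REMOTE
if __FILE__ == $PROGRAM_NAME && ARGV.size == 2
  watch_and_sync(*ARGV)
end
```

Because rsync here only copies from the local directory to the remote one, files created inside the container never come back, which is the trade-off described above.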

docker-osx-dev says it will install Docker, Boot2Docker, Docker Compose, VirtualBox, fswatch, and the `docker-osx-dev` script itself. Of those, I only need fswatch and the script, so I install just what I need:

    brew install fswatch
    curl -o /usr/local/bin/docker-osx-dev https://raw.githubusercontent.com/brikis98/docker-osx-dev/master/src/docker-osx-dev
    chmod +x /usr/local/bin/docker-osx-dev

Then I run the `docker-osx-dev` command in a new tmux pane in my project directory. It automatically picks up the directories listed in `docker-compose.yml` and asks whether to disable the shared folder on the Docker host VM. I choose yes, and it starts to sync files. Once the files are synced, I start the `test` container again. This time there are no surprises! :-P

Conclusion

Docker helps save time when setting up working environments and workflows, which means a lot for a team working on a project together. However, it would be better to run Docker on a native Linux machine.